The Global Race for Artificial Intelligence: Weighing Benefits and Risks

The British science fiction writer and futurist Arthur C. Clarke once said, “any sufficiently advanced technology is indistinguishable from magic”. Artificial Intelligence (AI) brings a host of real-world applications that had earlier been merely the subject of science fiction novels or movies. AI-powered cars are already under rigorous testing and are quite likely to ply the roads soon. The social humanoid robot Sophia became a citizen of Saudi Arabia in 2017. Apple’s intelligent personal assistant, Siri, can receive instructions and interact with human beings in natural language. Autonomous weapons can execute military missions on their own, identifying and engaging targets without any human intervention. In the words of John McCarthy, AI is the “science and engineering of making intelligent machines, especially intelligent computer programs”. As a burgeoning discipline of computer science, AI enables intelligent machines to execute functions similar to human abilities such as speech, facial, object or gesture recognition, learning, problem solving, reasoning, perception and response.

The term AI was coined in 1956, and the early research in the 1950s was confined to problem solving and symbolic methods. In the 1960s, the interest of the US Department of Defense steered research towards mimicking basic human reasoning.1 The use of ‘neural networks’ dominated the period from the 1950s to the 1970s. AI research then graduated towards ‘machine learning’ algorithms from the 1980s until around 2010. The US Defense Advanced Research Projects Agency (DARPA) developed intelligent personal assistants in 2003,2 long before Siri, Alexa or Cortana came into existence. AI has made inroads into automation and decision support systems to complement or augment human abilities. At present, AI is in the era of ‘deep learning’. Futuristic applications such as self-driving cars rely heavily on deep learning and natural language processing. As an emerging technology segment, deep learning uses neural networks and leverages advancing computing power to detect complex patterns in large data sets. Strides in supercomputing and Big Data analytics are further enhancing AI applications relating to advanced training or learning.

From Labs to the Real World

AI enables machines to think intelligently, somewhat akin to the intelligence human beings employ to learn, understand, think, decide, or solve a problem in their daily personal or professional lives. Intelligence is intangible. The present generation of computing systems generates, stores and analyses data. AI enhances the ability of computer systems to learn from their experiences over time, makes them capable of reasoning and of perceiving relationships and analogies, and helps them solve problems, respond in natural languages and adapt to new conditions. As an ensemble of a wide spectrum of disciplines, including computer science, biology, linguistics and mathematics, AI allows machines to sense and comprehend their surroundings and act according to their own intelligence or learning.3 These intelligent machines, with the explosion of digital data and growing computational power, employ advanced algorithms to enable collaborative and natural interactions between human beings and machines, extending the human ability to sense, learn and understand.4

After the Second World War, the research interests of militaries intensified in the domains of cryptography and computing, which gave the necessary thrust to AI. In 1950, the British mathematician Alan Turing raised the highly relevant question “Can machines think?”5 His anticipation of today’s machine learning and deep learning, along with the seminal work of Warren McCulloch and Walter Pitts in artificial neural networks, is foundational to AI. More than six decades ago, the Dartmouth Summer Research Project on Artificial Intelligence in 1956 created AI as a research discipline.6 Backed by military interests and funding in the initial decades, AI further matured in the academic environment at Massachusetts Institute of Technology,7 Carnegie Mellon University,8 and Stanford,9 institutions which continue to hold the top rankings in AI research, amongst others.

The present wave of enthusiasm in AI is backed by the industry, with Apple, Amazon, Google, Facebook, IBM, Microsoft and Baidu in the lead. The automotive industry is also harnessing the benefits of AI for self-driving cars, led by Tesla, Mercedes-Benz, Google and Uber. Prominent advances have been made in facial recognition and verification, with algorithms developed by Google as GoogLeNet, Facebook as DeepFace and Microsoft as Face API for Azure. Facial detection has attracted deep interest from law enforcement and security agencies. China is known to be building a massive facial recognition system, connected with its surveillance camera networks, to assist in detecting criminals and fugitives.

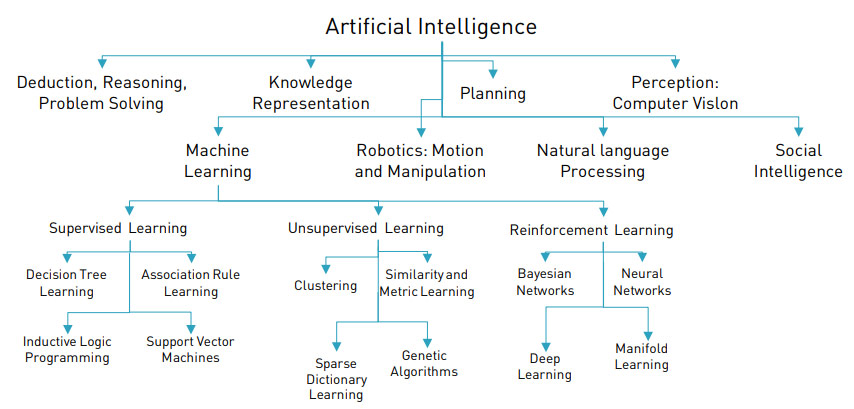

Classification of streams under Artificial Intelligence

Source: Ajit Nazre and Rahul Garg, “A Deep Dive in the Venture Landscape of Artificial Intelligence and Machine Learning.”

AI systems can learn on their own. Rather than depending upon a pre-programmed set of instructions or pre-defined behavioural algorithms, they can learn from their interactions or experiences and thereby enhance their capabilities, knowledge and skills.10 AI is unveiling novel and astonishing applications in both civilian and defence domains for a host of operations which have essentially been a preserve of human beings. AI is widely acknowledged as a technological game changer. At the lower end, smartphone apps and social media platforms have already begun using AI for face recognition or content translation from one language to another. High-end applications include IBM’s Watson, an AI ecosystem which can sift through a wide variety of data from a broad range of sources to answer questions posed in natural language. AI is finding path-breaking applications in fields as diverse as healthcare, life sciences, data analysis, cyber security and finance. AI is being extensively integrated into segments relevant to general users, technology enthusiasts, industry, governments and the armed forces. Some of them are discussed below:

- Gaming industry, where AI-powered computers can evaluate a large number of possible positions in games such as chess, poker, and Go. These computers can enhance their own intelligence by competing with human beings, as well as test the skills of the human beings playing against them, in games or simulations which require greater mathematical and strategic depth.

- Natural language processing to facilitate linguistic interaction between the computers and human beings for conversation. Computers with natural language processing capability can understand and generate human language, including speech, imitating human capabilities of listening, comprehending, thinking and responding.

- Vision and voice systems to interpret and comprehend visual inputs, as in image analysis, clinical diagnosis and facial recognition, or voice inputs to recognize the source of a sound. Computers equipped with vision systems can recognize patterns and identify objects in a picture or video, in effect interpreting their surroundings. Also, handwriting recognition algorithms can comprehend handwritten letters and convert them into editable text or any other desired format.

- Law enforcement or internal security requirements for detecting and recognizing individuals or criminals, with multitudes of data streaming from police databases or the network of surveillance cameras.

- Healthcare industry to design optimized treatment plans, assistance in repetitive jobs, data management for medical records, or even assistance in clinical decision making with better analysis of diagnostics and interpretation of clinical laboratory results.

- Banking and financial services for fraud detection using advanced algorithms to identify patterns in transactions and consumer behaviors which are risk prone.

- Automotive industry, which is already using AI algorithms to enhance fuel efficiency and safety in vehicles and to build features such as automatic braking, collision avoidance systems, alerts for pedestrians and cyclists, and intelligent cruise controls. AI is also helping insurance providers arrive at better risk assessment.

- While private enterprises are using AI in their IT functions, technology companies are charting out the plans to extend the applications to marketing, customer service, finance, human resources and strategic planning.11

- AI is also changing the ways militaries command, train, and deploy their forces. Modern day surveillance techniques generate volumes of data and imagery from an array of platforms, such as Unmanned Aerial Vehicles, Synthetic Aperture Radars, Airborne Early Warning Systems and Satellites. AI is helping battlefield commanders identify hidden patterns at incredible speed and with high precision and accuracy. These systems can augment human strategic analysis. Autonomous weapons can also alter symmetry in the battlefield. The very idea and concept of Lethal Autonomous Weapons (LAW) has, however, met with resistance owing to ethical and humanitarian considerations. Even so, a few militaries are known to be working on these aspects of AI.

- AI has many peacetime applications as well. It is being used to train soldiers and pilots, simulate war games, synthesize information from surveillance systems and address critical problems in optimizing logistics, fleet management and maintenance.

Potential Benefits and Risks for India

The global community hopes to walk the tightrope of balancing the breakthroughs in AI against the rising threats it poses to the well-being of the human race. AI technology has advanced much more rapidly than the international legal and ethical responses to it. AI has a distinctive ‘dual-use’ nature. While its benefits for humanity are immense, they come with risks arising from a multitude of factors. The situation is not about humans vs. machines, but about harnessing camaraderie between humans and machines to make better decisions. AI has also opened up a geopolitical debate and intense competition among nation states.

This is also being termed a ‘Sputnik Moment’, as Russia and China are challenging the pre-eminence of the US in the next generation of technology development. Russian President Vladimir Putin has stated that “whoever becomes the leader in this sphere will become the ruler of the world.”12 The Chinese government in July 2017 rolled out its New Generation AI Development Plan to emerge as a center for AI innovation and a global leader in AI technology and applications by 2030.13 In addition to the government and military in China, the private sector and academia are also offering tough competition. In terms of the number of publications on AI in scientific journals from 1996 to 2016, China ranks first, ahead of the US, Japan, UK and Germany.14 As an indicator of increasing Chinese interest and investment in AI research, China’s output of scientific publications has increased in volume. However, the quality of these publications is questionable, as they score low on citation impact. China’s premier private sector entities, Alibaba, Baidu and Tencent, are also in head-on competition with Google, Microsoft and IBM.

The armed forces of the US and China have already invested billions of dollars to develop LAWs, intending to gain strategic and tactical advantage over each other. This runs the risk of an arms race. Just as chemists and biologists supported international agreements prohibiting chemical and biological weapons, leading robotics and AI pioneers have called on the United Nations to ban the development and use of LAWs.15

India fares only average in the surging competition for AI technology development. Research output from India in international journals ranks seventh, and its volume is one fifth of China’s. There is no clearly stated policy document or vision statement for AI development. However, in February 2018, the Department of Defence Production constituted a 17-member task force to study the use of AI for both military applications and technology-driven economic growth, with representation from the National Cyber Security Coordinator, armed forces, Indian Space Research Organisation, Atomic Energy Commission and Ministry of Defence.16 The potential benefits are plenty, from basic sectors like healthcare, governance and economy to specialized ones like foreign policy, defence and security.

As India is poised for reforms in governance, AI can help with process optimization and cost savings for the government, in addition to solving some strategic problems or assisting in decision making. Economic growth is vital for development, and the next generation of economic growth is anticipated to be fuelled by technologies relating to big data, blockchain, quantum computing and AI. These game-changing technologies will spur innovation, create value for investors, generate specialized job domains and, as a result, propel economic growth. India has one of the world’s largest automotive industries, with a significant production and consumption base. AI applications have vast scope in the automotive sector, ranging from enhancing fuel efficiency to passenger safety to the concept of self-driving vehicles.

The healthcare sector in India is burgeoning with innovation and demand, with business models unique to Indian requirements and spending power. AI can augment the potential of the government and private sector to deliver healthcare services and products with improved drug safety, better diagnosis and analysis of clinical reports for preventive and accurate treatment. More advanced applications of AI extend to the domains of foreign, defence and security policies. Deep learning in AI can unravel futuristic functions by augmenting the decision making ability of humans with access to information derived from large data sets.

Summary of Potential Benefits of AI and Risks

| Sector | Potential Benefits | Potential Risks |
|---|---|---|
| Governance | Process Optimization & Cost Saving; Decision Making & Problem Solving; Human Resources Management | Lack of Technical Competence; Inability to Synchronize Goals/Expectations; Dependence on Foreign Technology |
| Economy | Next Generation of Economic Growth; Spurring Innovation; Value and Job Creation | Economic Competition & Espionage; Threats to Intellectual Property; Loss of Conventional Jobs |
| Automotive Industry | Self-driving Cars; Enhanced Fuel Efficiency; Enhanced Safety Features; Optimized Logistics & Supply Chain | Regulatory Challenges; Overdependence on Technology; Software Error, Defect or Failure; Susceptibility to Hacking/Interference |
| Defence & Security | Decision Making (Tactical & Strategic); Training and War-gaming; Logistics, Fleet Management; Periodic Maintenance; Intelligence Analysis; Face Recognition & Crime Prevention | Ethical & Legal Concerns from LAWs; Dependence on Foreign Technology; Human Safety & Security; Software Error, Defect or Failure; Potential Weapons Arms Race |
| Foreign Policy | Decision Making; Scenario Analysis; Analysis of Historical Data/Events; Negotiations; Information Analysis; Public Diplomacy | Lack of Cognitive Data for Deep Learning; Multilateral Rules of the Road; Technology Acceptance in Decision Making; Lack of Data from Other Countries; Dependence on Foreign Technology; Potential Weapons Arms Race |
| Healthcare | Drug Discovery and Safety; Diagnosis and Lab Results Analysis; Preventive Care; Insurance Risk Assessment | Training Doctors and Paramedical Staff; Generating Awareness; Acceptance of AI in Medical Practices; Technology Affordability |

Defence and foreign policy decisions are based on immense cognitive and intangible skillsets. As AI evolves further, it could play a vital role in analyzing large data sets, intelligence inputs, imagery from satellites or other airborne platforms, scenarios or even draw cognitive deductions from the historical records of foreign policy decisions or deployment of military resources during previous wars. Such detailed analyses could also supplement individual and organisational ability during bilateral or multilateral negotiations, and military standoffs or geopolitical conflicts. AI is also being used for crime prevention as facial detection and recognition technology has made strides.

Like any advanced technology, AI has its own set of risks. AI has to meet the first and foremost challenge of acceptability with users from the government, public sector and the armed forces, or even the private sector. As users of AI, their interest in the technology augmenting their own ability, and not posing a threat, is quite pertinent. Technical competence in this fast-paced sector, primarily in the case of government, could be a roadblock. AI can better adapt to the goals and expectations of Indian decision makers if the technology development is indigenous. Foreign dependence in this case would be detrimental and unproductive.

AI has set off an economic and technological competition, which will further intensify. Any delay in recognizing the benefits and in innovating runs the risk of pushing India into the early majority, late majority or even the laggards in the technology adoption bell curve, limiting its ability to draw economic advantage. One of the major risks arises from LAWs, owing to ethical considerations, human safety concerns and the perils of an arms race. LAWs operate without human intervention, and there is a formidable challenge in distinguishing between combatants and non-combatants, which is a matter of human judgment.

The first meeting of the Convention on Certain Conventional Weapons group of governmental experts (GGE) on lethal autonomous weapons systems was held in November 2017 under the chairmanship of India.17 India has abundant diplomatic experience in arms control, which AI algorithms could possibly ‘deep learn’ and simulate to chart out a better arms control strategy for LAWs. More than a technology developer or consumer, India can play a vital role in defining the multilateral rules of the road and help set the best ethical standards to dissuade any arms race in LAWs, ensuring safe and beneficial Artificial Intelligence for all.

Views expressed are of the author and do not necessarily reflect the views of the IDSA or of the Government of India.

- 1. “Artificial Intelligence: What it is and why it matters”, https://www.sas.com/en_us/insights/analytics/what-is-artificial-intellig..., accessed on 15 February 2018.

- 2. “Cognitive Assistant that learns and organizes”, Artificial Intelligence Centre at SRI International, http://www.ai.sri.com/project/CALO, accessed on 15 February 2018.

- 3. Accenture, “Artificial Intelligence: The Future of Business”, https://www.accenture.com/in-en/artificial-intelligence-index, accessed on 15 February 2018.

- 4. Microsoft, “Artificial Intelligence”, https://www.microsoft.com/en-us/research/research-area/artificial-intelligence/, accessed on 15 February 2018.

- 5. A. M. Turing, “Computing Machinery and Intelligence”, Mind, Vol. 59, No. 236, pp. 433-460.

- 6. “The Dartmouth Artificial Intelligence Conference”, https://www.dartmouth.edu/~ai50/homepage.html, accessed on 17 February 2018.

- 7. “Mission and History”, MIT Computer Science & Artificial Intelligence Lab, https://www.csail.mit.edu/about/mission-history, accessed on 17 February 2018.

- 8. “Artificial Intelligence”, Carnegie Mellon University Computer Science Department, https://www.csd.cs.cmu.edu/research-areas/artificial-intelligence, accessed on 17 February 2018.

- 9. “Stanford Artificial Intelligence Laboratory”, http://ai.stanford.edu/, accessed on 17 February 2018.

- 10. R L Adams, “10 Powerful Examples of Artificial Intelligence in Use Today”, Forbes, 10 January 2017, https://www.forbes.com/sites/robertadams/2017/01/10/10-powerful-examples-of-artificial-intelligence-in-use-today/#1e4867ad420d, accessed on 17 February 2018.

- 11. Based on “Global Trend Study on AI” conducted by Tata Consultancy Services, https://www.tcs.com/artificial-intelligence-to-have-dramatic-impact-on-business-by-2020, accessed on 19 February 2018.

- 12. David Meyer, “Vladimir Putin Says Whoever Leads in Artificial Intelligence Will Rule the World”, Fortune, 04 September 2017, http://fortune.com/2017/09/04/ai-artificial-intelligence-putin-rule-world/, accessed on 19 February 2018.

- 13. Zhang Dongmiao, “China maps out AI development plan”, Xinhua, 20 July 2017, http://www.xinhuanet.com/english/2017-07/20/c_136459382.htm, accessed on 19 February 2018, and “Notice of the State Council on Printing and Distributing a New Generation of Artificial Intelligence Development Plan”, 08 July 2017, http://www.gov.cn/zhengce/content/2017-07/20/content_5211996.htm, accessed on 19 February 2018.

- 14. “Scimago Journal and Country Rank”, http://www.scimagojr.com/countryrank.php?category=1702&area=1700, accessed on 17 February 2018.

- 15. Samuel Gibbs, “Elon Musk leads 116 experts calling for outright ban of killer robots”, The Guardian, 20 August, 2017, https://www.theguardian.com/technology/2017/aug/20/elon-musk-killer-robots-experts-outright-ban-lethal-autonomous-weapons-war, accessed on 19 February 2018.

- 16. Pranav Mukul, “Task force set up to study AI application in military”, The Indian Express, 03 February 2018, http://indianexpress.com/article/technology/tech-news-technology/task-force-set-up-to-study-ai-application-in-military-5049568/, accessed on 19 February 2018.

- 17. “Report of the 2017 Group of Governmental Experts on Lethal Autonomous Weapons Systems (LAWS)”, CCW/GGE.1/2017/CRP.1, Geneva, 20 November 2017, p. 1.
